What is A/B Testing?

A/B testing, also known as split testing, is an experiment that compares two versions of something to discover which one performs better with your audience, based on measurements such as clicks or conversions. In this post, the focus is email marketing.

In order to have a valid A/B test, you must first plan your hypothesis.

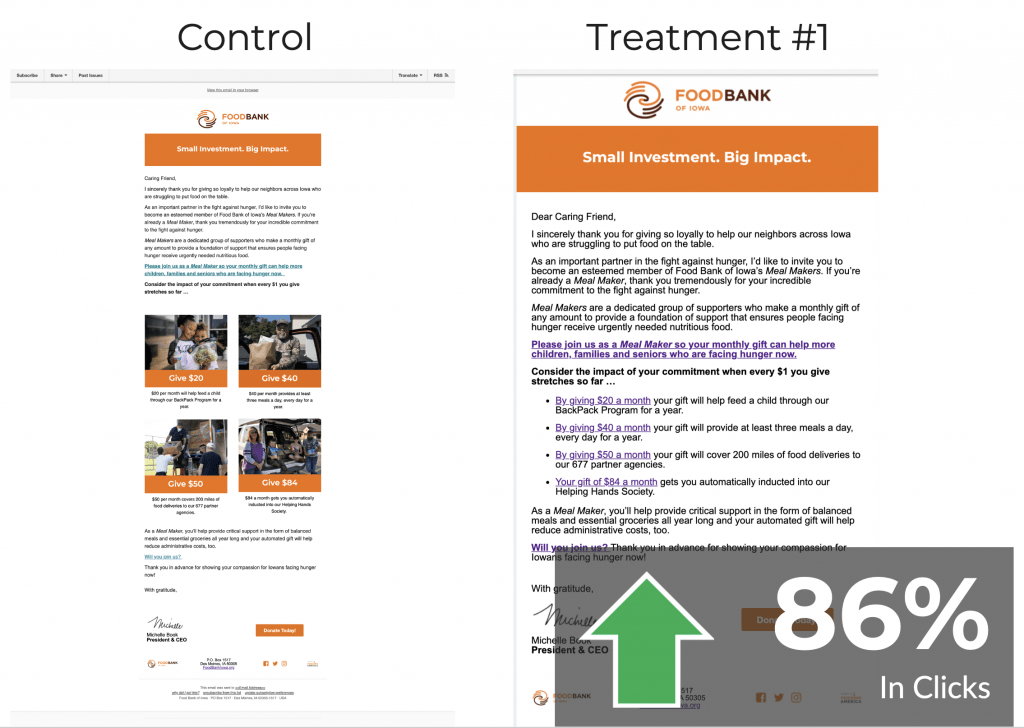

Let’s take a look at the example email above. In the control version, images linked to each specific ask amount; the treatment version used hyperlinked bullet-point text for the ask amounts instead. The hypothesis behind this test was that replacing the images with plain hyperlinked text would make the asks clearer to the reader and lead to an increase in overall clicks.

In this case, the treatment with the hyperlinked plain text led to an 86 percent increase in clicks.
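A percentage like this is usually a relative lift: the change in click rate divided by the control’s click rate. The post doesn’t share the underlying counts, so the numbers in this minimal Python sketch are made up purely to show the arithmetic, and the function name `relative_lift` is hypothetical.

```python
def relative_lift(control_clicks: int, control_sent: int,
                  treatment_clicks: int, treatment_sent: int) -> float:
    """Percent change in click rate from the control to the treatment."""
    control_rate = control_clicks / control_sent
    treatment_rate = treatment_clicks / treatment_sent
    return (treatment_rate - control_rate) / control_rate * 100


# Hypothetical counts, not the real data behind the test above.
lift = relative_lift(control_clicks=200, control_sent=10_000,
                     treatment_clicks=372, treatment_sent=10_000)
print(f"{lift:.1f}% increase in clicks")  # prints: 86.0% increase in clicks
```

Comparing rates rather than raw click counts keeps the result fair if the two versions went to slightly different numbers of recipients.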

So, you might be asking, “How and what should I test in my email marketing program?” Below are four tests to consider when A/B testing your emails.

Test #1: HTML vs. Plain Text Emails

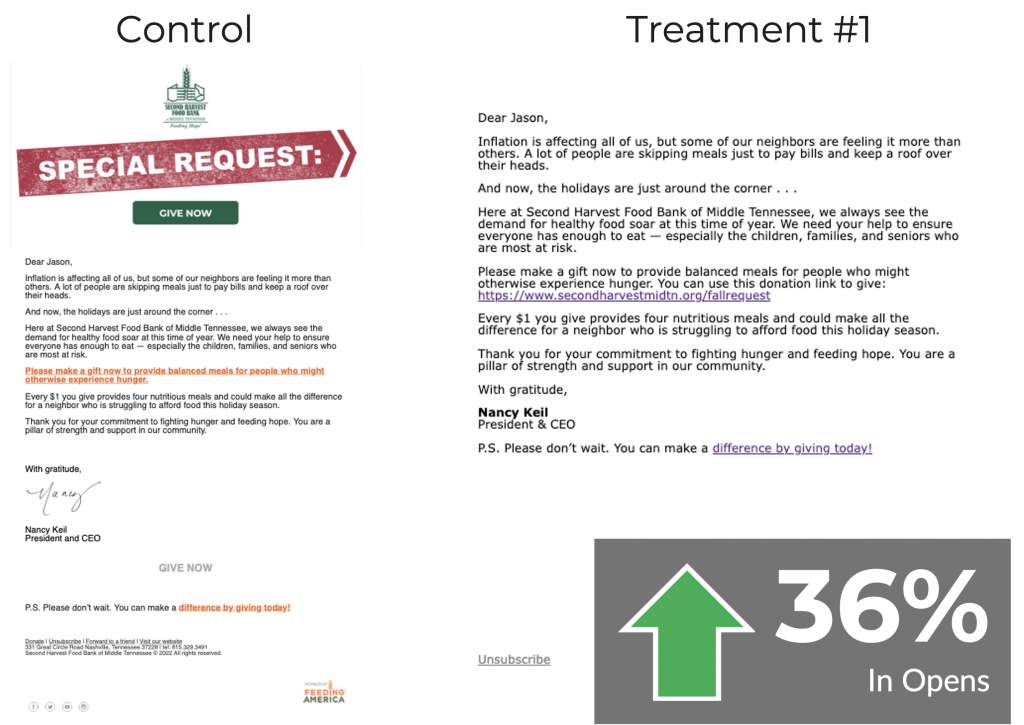

For this test, you’ll want to send the exact same copy in both versions, but one email will be heavy on attractive HTML design elements while the other will use a plain text design, giving the appearance that someone simply opened their email app and wrote to you directly. The thinking behind this test is that people give to people, not to machines, and not just because an email looks great. We hypothesized that the plain text version would lead to a higher delivery rate, a higher click rate, and therefore an increase in donations.

After testing, we found that removing the design elements and sending the email in a plain text format led to a 36 percent increase in email opens and a 16 percent increase in clicks.

Test #2: Copy Length

When sending emails, how much copy is enough? How much copy makes it too long?

According to HubSpot, the ideal length of a “sales email” is between 50 and 100 words… That’s short! If you’ve read this far, you’ve already made it past 200 words of this post!

I’d recommend taking that advice with a grain of salt. There are different types of marketing tactics and different types of consumers, and what works best for a sales email won’t necessarily translate to a fundraising email campaign.

One of the best ways to approach this test is to create one email with long-form copy (about 250 words or more) and test it against an email with short-form copy (between 50 and 150 words). Before you can decide whether short or long copy works best for your organization, you’ll have to decide on the email’s goal. Make sure your copy takes your reader down a path that converts them with a call to action (CTA). If your email doesn’t have a goal, why are you sending it?
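Before sending, it can help to confirm that your two variants actually land in different length buckets. This minimal Python sketch counts words by splitting on whitespace (an assumption; your email platform may count differently) and applies the ranges suggested above. The function name `copy_length_bucket` and the sample drafts are hypothetical.

```python
def copy_length_bucket(body: str) -> str:
    """Classify email copy using the word-count ranges suggested in this post."""
    word_count = len(body.split())  # simple whitespace-based word count
    if 50 <= word_count <= 150:
        return "short-form"
    if word_count >= 250:
        return "long-form"
    return "outside both ranges"  # under 50 words, or 151 to 249 words


short_draft = "Your gift matters. " * 20   # 60 words
long_draft = "Your gift matters. " * 100   # 300 words
print(copy_length_bucket(short_draft), copy_length_bucket(long_draft))
# prints: short-form long-form
```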

Test #3: CTA

If you test different copy lengths in an email campaign and don’t reach a clear conclusion, it may be time to test the CTA itself.

When testing a call to action, it’s important to ask, “What kind of CTA does my email campaign have?” Does the CTA use a button? Hyperlinked text? A graphic? All of the above? Does the CTA appear too early in the message, or not at all?

You’ll want to run this test multiple times. Here are some good ways to test your CTA:

- Hyperlinked Text: Is your copy compelling enough for the reader to click? Test different phrasing.
- Hyperlinked Graphics: Are you receiving clicks on an image asking for a donation? If not, try testing it against an HTML button.
- HTML Button: The color of your button may affect how many clicks it receives, so try testing various colors.
- Multiple Buttons: Do you have more than one donation button in your email? Track which placement receives more clicks.
- Too Many Options: Does your email campaign contain all of the elements above? Try removing one element from each campaign to see what gets the best response from your audience.
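If your email platform lets you tag each link, tallying clicks per CTA shows which element or placement draws the most response. This minimal Python sketch assumes a hypothetical click log in which each entry is the tag of the CTA that was clicked; the tag names and the function name `clicks_per_cta` are illustrative, not from any particular platform.

```python
from collections import Counter

# Hypothetical click log: one entry per click, recording which tagged CTA was clicked.
click_log = ["top_button", "text_link", "top_button",
             "bottom_button", "top_button", "text_link"]


def clicks_per_cta(log):
    """Return (cta, clicks) pairs sorted from most to least clicked."""
    return Counter(log).most_common()


print(clicks_per_cta(click_log))
# prints: [('top_button', 3), ('text_link', 2), ('bottom_button', 1)]
```

Raw counts are a fair comparison only when every CTA appeared in every email sent; if you remove an element from one campaign, compare clicks per recipient instead.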

Test #4: Timing

Any post discussing A/B testing wouldn’t be complete without discussing the timing of your email campaigns, so we’ll cover that too! A common question people ask when creating fundraising emails is, “What day of the week and at what time do you send your emails?”

Let’s break this down. Email works best when it’s timely. If you’re not reaching your audience while they’re working through their inbox, they could miss the opportunity to respond to a time-sensitive cause.

To help guide your timing tests, begin by looking over historic email data. As you review it, record the day of the week each email was sent and how each one performed on opens, clicks, and conversions. Patterns may emerge. If, for example, your conversion rate is better on Tuesdays but your open rate is better on Thursdays, you may want to send solicitation emails on Tuesdays and informational emails on Thursdays. The main thing to keep in mind when testing email timing is that looking for patterns is a great starting point for reaching your audience more effectively.
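The review described above comes down to grouping past sends by weekday and comparing rates. This is a minimal Python sketch under a few assumptions: the send records are made-up sample data, each record holds the send date plus delivered, opened, clicked, and converted counts, and every rate is calculated per delivered email. The names `history` and `rates_by_weekday` are hypothetical.

```python
from collections import defaultdict
from datetime import date

# Index 0 is Monday, matching date.weekday().
WEEKDAYS = ("Monday", "Tuesday", "Wednesday", "Thursday",
            "Friday", "Saturday", "Sunday")

# Hypothetical sample data: (send date, delivered, opens, clicks, conversions).
history = [
    (date(2024, 3, 5), 1000, 220, 40, 12),   # a Tuesday
    (date(2024, 3, 7), 1000, 260, 35, 6),    # a Thursday
    (date(2024, 3, 12), 1000, 210, 42, 14),  # a Tuesday
    (date(2024, 3, 14), 1000, 270, 33, 7),   # a Thursday
]


def rates_by_weekday(records):
    """Sum each metric by weekday, then divide by emails delivered."""
    totals = defaultdict(lambda: {"delivered": 0, "opens": 0,
                                  "clicks": 0, "conversions": 0})
    for sent_on, delivered, opens, clicks, conversions in records:
        day = totals[WEEKDAYS[sent_on.weekday()]]
        day["delivered"] += delivered
        day["opens"] += opens
        day["clicks"] += clicks
        day["conversions"] += conversions
    return {
        name: {
            "open_rate": t["opens"] / t["delivered"],
            "click_rate": t["clicks"] / t["delivered"],
            "conversion_rate": t["conversions"] / t["delivered"],
        }
        for name, t in totals.items()
    }


for weekday, rates in rates_by_weekday(history).items():
    print(weekday, {k: round(v, 4) for k, v in rates.items()})
```

With this sample data, Tuesday shows the higher conversion rate and Thursday the higher open rate, the kind of split the paragraph above describes.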

Conclusion

There are a lot of options to consider when you’re deciding whether A/B testing is right for your organization. Above all, make sure that what you’re testing can lead you to a solid conclusion. You’ll get there by staying focused on the data behind each decision and the outcome each test produces. Regardless of your level of experience with A/B testing, it is an invaluable tool that can (and will) improve your email marketing.
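One common way to check whether a difference is solid or just noise is a two-proportion z-test on click (or conversion) rates. This minimal Python sketch assumes recipients were randomly split between the two versions and each group is reasonably large; the function name `two_proportion_z_test` and the sample counts are hypothetical.

```python
import math


def two_proportion_z_test(clicks_a: int, sent_a: int,
                          clicks_b: int, sent_b: int) -> tuple[float, float]:
    """Compare the click rates of version A (control) and B (treatment).

    Returns (z, p_value), where p_value is two-sided.
    """
    rate_a = clicks_a / sent_a
    rate_b = clicks_b / sent_b
    # Pooled rate under the assumption that both versions perform the same.
    pooled = (clicks_a + clicks_b) / (sent_a + sent_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / sent_a + 1 / sent_b))
    z = (rate_b - rate_a) / std_err
    # Two-sided p-value from the standard normal: 2 * (1 - Phi(|z|)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical counts: a large gap versus a small one on the same list size.
print(two_proportion_z_test(200, 10_000, 372, 10_000))
print(two_proportion_z_test(200, 10_000, 210, 10_000))
```

A small p-value (often read against a conventional 0.05 cutoff, which is a common habit rather than a rule) suggests the difference is unlikely to be chance alone; a large one means you should keep testing before drawing a conclusion.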

Have you tried A/B testing in your email marketing? What worked for you and what didn’t? Let me know in the comments below!